Why Gather In App Feedback?

Collecting feedback within the app uniquely helps us to understand how end users experience technology in the moment, bringing the voice of the end user to the center of product decisions.

| Benefits | Drawbacks |
|---|---|
| Reach users in the moment. Get a snapshot of user sentiment regarding a specific moment or interaction in the product. Reach users at scale. Potentially reach the entire user base, not just a subset of users who respond to surveys. Hear from targeted groups of users. Leverage usage data to segment the user base to hear from specific audiences. Track sentiments over time. Surfacing the same engagement multiple times to a user over a period of time enables us to measure the impact of product and design changes on the user experience. | Captures the |

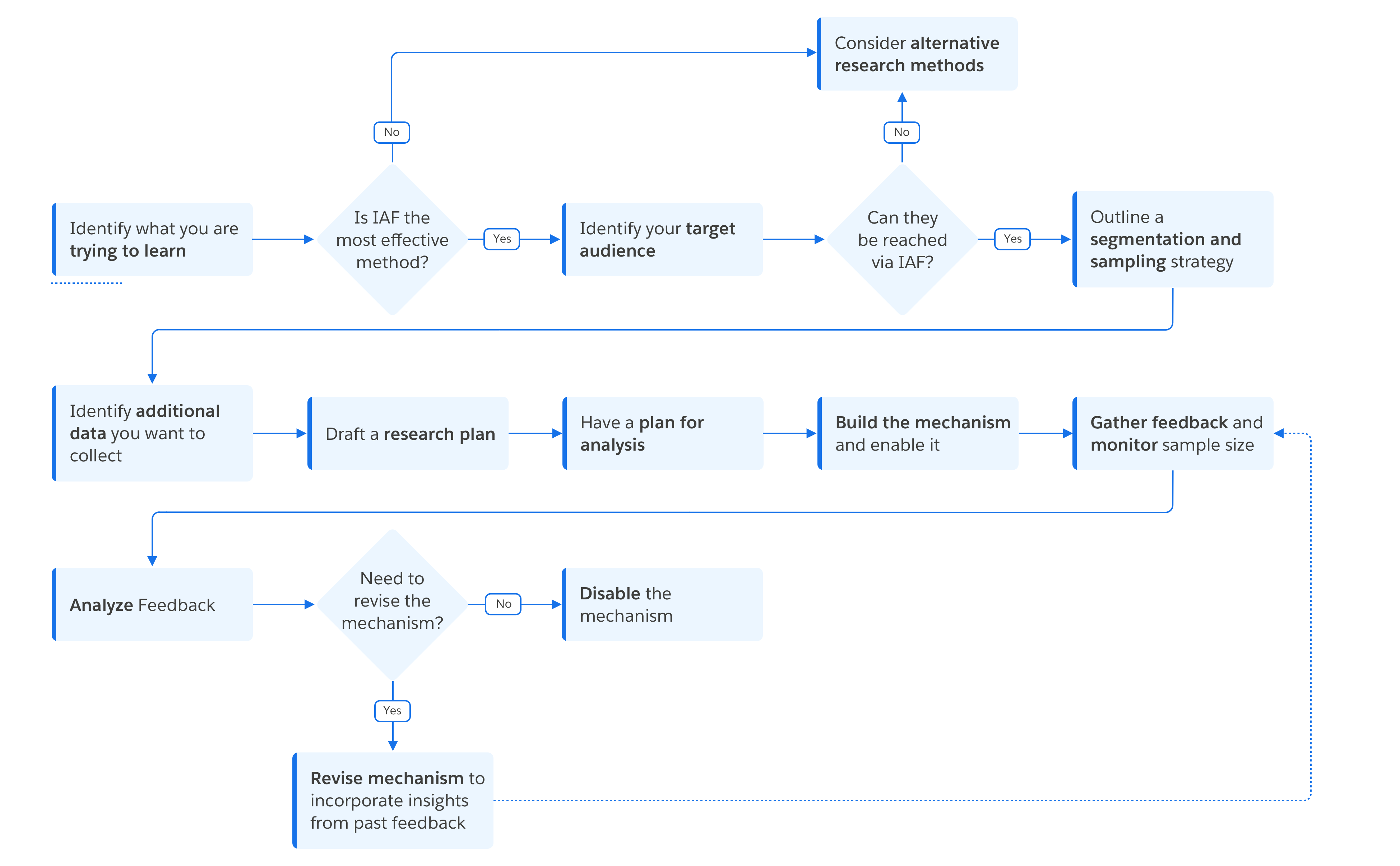

Planning the Scope of Your Project

There are a few steps to keep in mind as you embark on your journey of collecting feedback from users. While these guidelines cover design and research strategy for implementing effective feedback engagement, be sure to talk to your product team partners to understand implementation strategy and technical constraints early in the process.

Borrowed from our good friends at Salesforce.

Question Best Practices

- Limit length to 3-5 questions. The priority should be to collect any feedback at all rather than the most comprehensive or detailed feedback. If users notice that there are too many questions, they may close or abandon the engagement before leaving feedback.

- Verbatim/open-ended: Generally, these are best written as non-specific prompts for the user to describe their experience. Avoid asking the user to provide suggestions for improving the application. Instead, ask them to describe their experience. Phrasing could take some of the following forms: (1) Tell us about your experience with our [feature/app]; (2) Is there any feedback you would like to share?

- System- or brand-level satisfaction rating: These are best asked in the context of general proactive surveys or user-invoked surveys, not task-based proactive surveys.

- Task- or feature-level ease/effort/satisfaction: These are best asked in the context of task-based proactive surveys.

- Reasons for satisfaction/dissatisfaction: If you're using a star or numerical rating question, follow up with a set of multiple choice options that include possible reasons for someone's rating, to contextualize the score.
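If your team drafts surveys as structured data, two of the practices above (keeping to no more than five questions, and following a rating with a question that contextualizes the score) can be checked automatically. The following is a minimal sketch, not tied to any particular survey tool: the `Question` type, the question kinds, and the `check_survey` function are all hypothetical names introduced here for illustration. It accepts either a multiple choice or an open text follow-up after a rating, which is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical question kinds, for illustration only.
OPEN_TEXT = "open_text"
RATING = "rating"
MULTIPLE_CHOICE = "multiple_choice"

MAX_QUESTIONS = 5  # upper bound of the 3-5 question guideline


@dataclass
class Question:
    kind: str
    prompt: str
    options: List[str] = field(default_factory=list)


def check_survey(questions: List[Question]) -> List[str]:
    """Return guideline warnings for a draft survey (empty list means none)."""
    warnings = []

    # Guideline: keep the engagement short so users do not abandon it.
    if len(questions) > MAX_QUESTIONS:
        warnings.append(
            f"Survey has {len(questions)} questions; limit is {MAX_QUESTIONS}."
        )

    # Guideline: a rating should be followed by a question that
    # contextualizes the score.
    for i, question in enumerate(questions):
        if question.kind != RATING:
            continue
        follow_up = questions[i + 1] if i + 1 < len(questions) else None
        if follow_up is None or follow_up.kind not in (MULTIPLE_CHOICE, OPEN_TEXT):
            warnings.append(
                f"Rating question {i + 1} has no follow-up to contextualize the score."
            )

    return warnings


draft = [
    Question(RATING, "How would you rate your overall experience using this feature?"),
    Question(OPEN_TEXT, "Tell us about your experience with this feature."),
]
print(check_survey(draft))
```

The check only enforces the upper bound on length, since the guidance prioritizes collecting any feedback at all over collecting comprehensive feedback.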

User Centric Questions

The quality of the data you capture through feedback mechanisms directly correlates to the quality of the questions you ask. Once you settle on a research objective, it's important to translate the objective into questions that capture the nuance of user behavior and intent. Keeping questions open-ended allows the user to give honest feedback without limiting the substance of what they may talk about. If you ask a star rating question or a binary yes-or-no question, be sure to follow up with an additional open text field or multiple choice option to help explain the rating selected. Referencing specific moments during the experience, such as "the last time you used this feature," also helps to ground users in their experience rather than relying on subjective memory or opinions of your product.

| Research Need | User Centric Questions |
|---|---|
| Does a user like or dislike a feature? | What do you think about this feature? Based on your visit today, how would you rate your overall experience using this feature? |
| Why did a user make a decision? | What were you hoping to have happen there? |
| Was something helpful? | Did you find what you were looking for? |
| What was something useful? | What was your goal today? Were you able to achieve it? |
| Was something accurate? | How helpful was this recommendation? Is there any information missing from this page? |
| What do you want to improve? What would you like us to know? | What's working well for you? Thinking about the last time you used this feature, what could have been improved? |
| What is the bug or factual error that a user would like to report? | What went wrong here? Help us understand what you were trying to do. |
| What is the idea that a user would like to share? | If you could change, add or improve just one thing about our product, what would it be? |